Let's say you are building a temperature analytics system, and you are working on both the hardware and the software. For the IoT devices that measure temperature, you decide to use one with a thermistor and one with a thermocouple. The rationale: if one technology fails, the other will carry you forward. This is the 1-out-of-2 principle or, since the two sensors use different technologies, diverse redundancy. It is quite intuitive and, in fact, the gold standard in hardware systems.

But then you decide to do the same thing in your software. You build two completely different implementations of the same temperature analytics component, both doing exactly the same thing. Pause for a moment and think about whether this is a good idea... The software safety standards actually advise against it, and the main reason is the nature of the failure.

Hardware usually breaks because of physics. A fan gets dusty, a capacitor leaks, a battery loses capacity, or moving parts wear out. If there is one electronic sensor and one mechanical switch, they are unlikely to share the same physical "blind spot." Thus, the two hardware failures are close to independent events.
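To put a number on why independence matters, here is a minimal sketch. The failure probabilities are made up for illustration, and `p_both_fail` is a hypothetical helper, not something from a real library.

```python
def p_both_fail(p_a: float, p_b: float) -> float:
    """Probability that two components fail together, assuming independence."""
    return p_a * p_b


# Hypothetical per-sensor failure probabilities, chosen only for illustration.
p_thermistor = 0.01
p_thermocouple = 0.02

p_pair = p_both_fail(p_thermistor, p_thermocouple)

print(f"thermistor alone:   {p_thermistor}")
print(f"thermocouple alone: {p_thermocouple}")
print(f"both at once (if independent): {p_pair:.4f}")
```

The product is far smaller than either input, and that is the whole appeal of redundancy. It only holds as long as the two failures really are independent.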

Software is different. Software doesn't break because of physics; it breaks because of math and misunderstanding. Knight and Leveson's 1986 experiment on N-version programming found that even when different teams write software independently, their failures are not independent: the teams tend to make the same logical errors in the same difficult parts of the requirements. If the specification says, "Handle the edge case of a 0.001 ms delay," and that requirement is hard to implement, both teams are likely to introduce a bug at that exact point. Diversity doesn't protect you from a flawed blueprint.
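Here is a small sketch of what a flawed blueprint looks like in code. Everything in it is hypothetical: the sentinel value, the two "team" functions, and the voter are invented for illustration. Assume the spec says "report the mean of the readings" and never mentions that a faulty sensor emits `-999.0`.

```python
FAULT_SENTINEL = -999.0  # hypothetical: what a sensor emits when it has no reading


def mean_team_a(readings):
    """Team A: plain sum divided by count."""
    return sum(readings) / len(readings)


def mean_team_b(readings):
    """Team B: incremental running mean, a genuinely different algorithm."""
    mean = 0.0
    for n, value in enumerate(readings, start=1):
        mean += (value - mean) / n
    return mean


def voted_mean(readings, tolerance=1e-6):
    """Accept a result only if both versions agree within the tolerance."""
    a = mean_team_a(readings)
    b = mean_team_b(readings)
    if abs(a - b) > tolerance:
        raise RuntimeError("versions disagree")
    return a


clean = [21.0, 22.0, 23.0]
with_fault = [21.0, 22.0, FAULT_SENTINEL, 23.0]

print(voted_mean(clean))       # both versions agree on a sensible value
print(voted_mean(with_fault))  # both versions agree on a nonsensical value
```

The two implementations share no code, yet the voter passes the bad result, because both teams faithfully implemented the same incomplete requirement. A comparison like this only catches the cases where the versions disagree.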

The second reason, obvious yet intriguing, is software maintenance. With two software versions, you now have twice as much code to maintain, verify, and document. I assume you'd already thought of this when I asked you to pause earlier. But why is software maintenance such a huge pain in the ass? How is it different from hardware maintenance?

In software, "maintenance" is a misnomer if you are from a hardware background. You aren't usually fixing something that wore out. You’re fixing something that was never actually right to begin with, or you're changing it because the world around it moved. When you change one line of code in a large system, you aren't just swapping a part; you are potentially changing the meaning of every other line that touches it. This is why software has "side effects" and hardware generally doesn't.

When hardware fails, the failure is usually tangible; it is easy to see when something has burnt or worn out. But when a Python script fails because of a race condition, there is no "smoke". The bug only exists in time. You have to build a mental model of the entire state machine just to see the ghost.
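To make "the bug only exists in time" concrete, here is a minimal sketch of a lost update. Real races depend on thread scheduling and are hard to reproduce, so this example uses generators as stand-ins for threads and a hand-written schedule; `increment` and `run` are hypothetical names.

```python
def increment(state):
    """Read-modify-write in two steps, pausing in between like a preempted thread."""
    current = state["count"]       # step 1: read
    yield                          # another task may run here
    state["count"] = current + 1   # step 2: write back a possibly stale value


def run(schedule):
    """Run tasks A and B one step at a time in the given order; return the count."""
    state = {"count": 0}
    tasks = {"A": increment(state), "B": increment(state)}
    for name in schedule:
        next(tasks[name], None)  # advance one step; ignore exhaustion
    return state["count"]


serial = run(["A", "A", "B", "B"])       # A finishes before B starts
interleaved = run(["A", "B", "A", "B"])  # both read before either writes

print("serial:", serial)
print("interleaved:", interleaved)
```

The code is identical in both runs; only the ordering differs. In the interleaved schedule one increment silently disappears, and nothing in the source text of `increment` looks broken.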

Hardware eventually reaches a "steady state." Once a server is racked and cooled, it stays the same for years. Software, however, sits in a soup of changing dependencies. Your uv environment might be locked, but the OS, the APIs it calls, and the security exploits discovered yesterday are all shifting. To keep software "the same," you have to change it constantly.

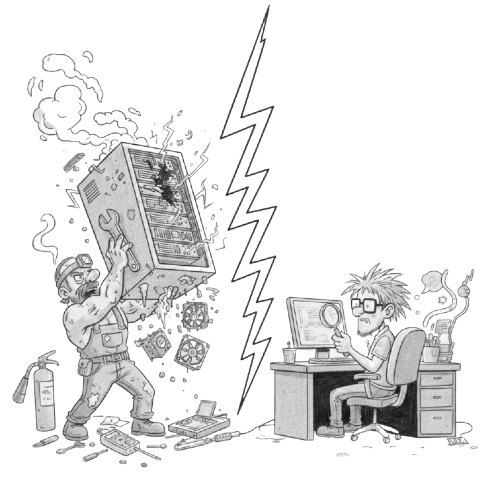

In the hardware world, you're a mechanic. In the software world, you're a historian and a detective trying to figure out what a version of yourself from three years ago was thinking. We try to solve software problems with hardware solutions because we're still thinking like mechanics. But code isn't a machine; it's a thought process. If you want to solve puzzles that never end, maintain software. Just don't be surprised when the puzzle starts fighting back.